PostgreSQL upgrading

Introduction

This is a digest of the relevant information that one might find useful to consult when upgrading from or to any PostgreSQL version.

Upgrading can be a mandatory operation due to a release reaching the EOL after 5 years of support as dictated by the PostgreSQL official versioning policy

Information about the last minor release of the EOL major version are also published. See for example: PostgreSQL 9.6 EOL Approaching as reported by the notes of the announce of the 12th August 2021: PostgreSQL 13.4, 12.8, 11.13, 10.18, 9.6.23, and 14 Beta 3 Released!:

PostgreSQL 9.6 will stop receiving fixes on November 11, 2021. If you are running PostgreSQL 9.6 in a production environment, we suggest that you make plans to upgrade to a newer, supported version of PostgreSQL.

i.e. the next and LAST minor releases for 9.6 will be 9.6.24 - November 11th, 2021 which implies that after the EOL date, no more bug and security fixes (or backports) will be published.

Our DBOD versioning policy aim to support a major version for three years (instead of the five years normally covered until the official postgreSQL EOL) and to request - at least once per year - a mandatory minor upgrade to the latest level available for all the major versions supported.

Reasons for upgrading

There are many good reasons to keep your database engine up to date like bug and security fixes that are continuously released with each minor release but also new features, improvements and other advancements normally coming with a major release.

Just to give an idea and name a few, in recent years there have been substantial improvements in partitioning, parallelism, concurrent online operations, replication and performance. In some cases, you can easily get very interesting improvements in performance, just by upgrading the db engine.

A good starting point to gather a more detailed picture of what your application and users are missing out is to consult the Feature Matrix but there are also many web sites focusing on particular aspects and versions.

Just to mention a couple of examples, you could have a look at the huge list compiled at Why upgrade PostgreSQL? Upgrading from 9.6.20 to 12.7 gives you 6.1 months worth of fixes (974 of them) or at the features and improvements pointed out at Just Upgrade: How PostgreSQL 12 Can Improve Your Performance

Overview of the upgrading process

Major releases can contain changes to internal structures and configuration that are not backward-compatible. Depending on a number of co-factors some application can stop working as expected or experience degraded performance. As a consequence, it is not generally advisable to run major upgrades automatically.

Before proceeding with the upgrade of a production instance, we strongly suggest to run extensive tests on a clone to verify that:

- the upgrade process itself can be completed successfully

- the elapsed and down time required are compatible with your constraints

- there are no unresolvable or blocking issues such that the upgrade is not viable

To apply the upgrade, we use the pg_upgrade utility which is very useful to leverage what is described in the PostgreSQL documentation:

...Major releases often change the layout of the system tables, but the internal data storage format rarely changes. pg_upgrade uses this fact to perform rapid upgrades by creating new system tables and simply reusing the old user data files...

You can find in the official documentation further information about upgrading a cluster, with potential issues and methods: dump/restore, pg_upgrade, logical replication

In addition to the standard DBOD features of backup and point-in-time restore, which are always possible, as part of our major upgrade procedure we take an additional backup snapshot, just before the upgrade, to make sure that downgrading to the state before the upgrade is easier and faster and more likely to succeed.

For information about backup and restore please consult the Backup and restore management section of this guide.

Minor upgrades

As it is well explained in the official documentation at Upgrading a PostgreSQL Cluster,

Minor releases never change the internal storage format and are always compatible with earlier and later minor releases of the same major version number. For example, version 10.1 is compatible with version 10.0 and version 10.6. Similarly, for example, 9.5.3 is compatible with 9.5.0, 9.5.1, and 9.5.6. To update between compatible versions, you simply replace the executables while the server is down and restart the server. The data directory remains unchanged — minor upgrades are that simple.

In a short summary, our main task in this case is to package and install the new binaries across all our hosts and change the puppet configuration accordingly, so that via a rundeck job, we can: stop the server, point the service to use the new binaries, restart the server.

When MINOR upgrading a primary/replica cluster, it is recommended to upgrade the primary only after successfully upgrading the replica. The upgraded replica should be able to read the WAL produced by the primary while they are still in the oldest minor format while the reverse is more at risk of failing.

How is the upgrading process activated

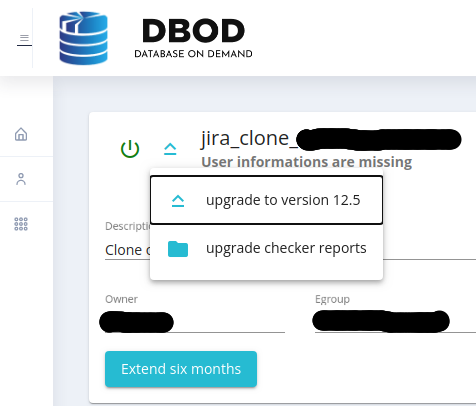

Any available upgrade will only be activated after a successful run of the upgrade checker. If the check is successful the upgrade button on the page of the DBOD web portal corresponding to checked instance will be activated. The version(s) to which is possible to upgrade to are presented when clicking on the upgrade button.

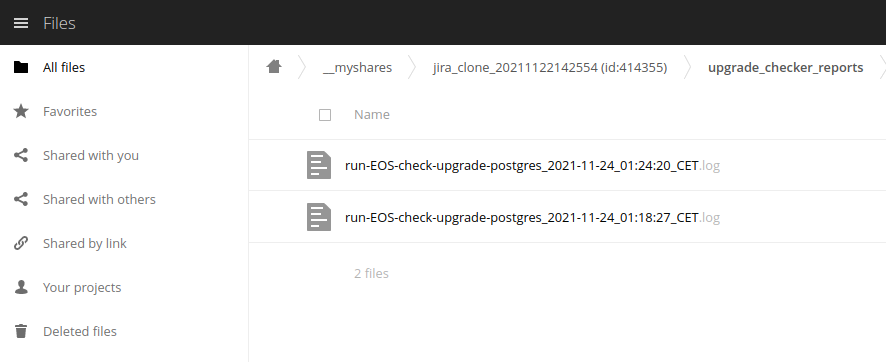

The "upgrade checker reports" click-able entry allows direct access to the instance report(s) produced by the checker in CERNBox to check for errors and any other relevant information including warnings and actions required to comply with requirements and enable the upgrade.

Please get in touch with us by opening a SNOW ticket to discuss any need or doubt that you might have on the upgrading, independently from the status of the upgrade button of your instance.

Some upgrades can also be enabled by the DBOD team across the entire estate programmatically (for example for the annual minor upgrades campaign) or by implementing manually the relevant ad-hoc upgrade rules and related configuration.

PostgreSQL extensions

PostgreSQL extensions are not upgraded as part of a major version engine upgrade. If that is required, they must be upgraded separately, one by one.

Some extension's versions are only supported on some specific PostgreSQL releases. When upgrading to a new PostgreSQL major release they might or might not need to be updated. Some come pre-built and packaged with PostgreSQL libraries other need a specific building procedure.

You can check the version of installed extensions in your instance with:

SELECT * FROM pg_extension;

and all the available versions with:

SELECT * FROM pg_available_extension_versions;

Certain installed extensions might need to be updated to the latest available version BEFORE or AFTER the upgrade with:

ALTER EXTENSION extension_name UPDATE TO 'new_version';

These activities will have to be done by the DBOD team although - whenever possible - we will aim to take care of them during the upgrade job.

Please be aware that some extensions might become incompatible with the new PostgreSQL release and will have to be dropped before upgrading.

To minimize the impact of any potential issue related with extensions, our recommendation is to test the upgrade on a clone and/or to request a brand new empty instance of the desired new major release to verify what extensions and versions will come with it.

Please refer to the specific extension documentation for further details. The contrib extensions are documented in the official PostgreSQL documentation at Appendix F. Additional Supplied Modules

Please do not hesitate to open a SNOW ticket to discuss your case or if you encounter any issue during the testing of the upgrade.

DBOD upgrading best practices

Considering all the relevant information, our recommendations to DBOD users are:

- keep up with the latest minor release available in DBOD (for the major version in use) to benefit from latest security and bug fixes

- always test a major upgrade beforehand on a clone to reduce the risk of unexpected and unwanted issues and to have an idea of the required downtime

- start making plans to upgrade to a more recent major version, according to the schedule inferred from the versioning policy (or as soon as a new version is announced) to make space for the testing effort, avoid last minute pressure and have sufficient time to work around any emerging issue

- be proactive, plan in advance, choose to run the upgrade when it is more convenient in terms of testing effort (for major upgrades) and when the impact of the downtime will be affordable and/or minimized

- failing to upgrade within the allocated time frame to the latest minor release, or to a more recent major version (when current version is EOL), will result in a forced upgrade which in turn can place a lot of effort on the DBOD team

- a forced MINOR upgrade normally requires a brief downtime but no unwanted consequences

- a forced MAJOR upgrade is exactly the same as upgrading without testing and could potentially result in: unwanted and serious consequences, significant downtime, avoidable pressure and stress for anyone affected or involved.

DBOD practical suggestions

- Before upgrading your production instance, we recommend to validate first the upgrade process in a test instance of the same version, or by requesting a clone from the DBOD web portal. The clone will normally remain available for one month.

- The execution of the upgrade checker job on a specific instance can be done from the web interface to verify in advance any potential blocking issue directly on the production instance or on the test clone.

- It is strongly recommended to run extensively all parts of your application against the upgraded test instance to identify any potential issue and be sure that everything will work as expected or that you know what issue might arise and how to solve or work around them. In some cases, rebuilding of some/all indexes might be required (or recommended) after the upgrade, before start using the upgraded database.

- The upgrade procedure is normally taking care of running - just after the pg_upgrade step is successfully completed - a

VACUUM (VERBOSE, ANALYZE)to compact and defragment all objects in the cluster. Parallel jobs are used depending on the size of database. The empty space reclaimed by compacting the data will be accumulated at the end of the allocated extents and NOT released back to the Operating System (it will not shows as a reduction in the monitoring of data size). The ANALYZE option is for gathering fresh optimiser statistics. This operation can run concurrently with normal activity but some slowness might be experienced, especially in very active databases. If you do not have urgent reasons to reopen the cluster immediately, waiting until the VACUUM (i.e. the overall upgrade job) is successfully completed will allow the operation to run smoothly and complete in less time and without interfeering with the users activity. - Please be aware that TOP LEVEL PARTITIONED TABLES (normally empty) ARE NOT ANALYZED AUTOMATICALLY. We recommend to identify and analyze tables without optimizer statistics by running the following command with psql in each user owned database:

SELECT 'ANALYZE VERBOSE ' || schemaname || '.' || relname || ';' AS stmt FROM pg_stat_user_tables WHERE last_analyze IS NULL AND last_autoanalyze IS NULL;\gexec - If you have concerns about the required downtime, testing all operations beforehand on a clone, should provide an approximate but good enough estimate.

- Check the Known Issues section below, the official release notes and in particular the Migration sections. Although you can upgrade directly from one major version to another you should read the major release notes of all intervening versions.

- When upgrading a Highly Available or a Primary/Replica cluster, you can create a clone of the primary for the testing but you should open a SNOW ticket at your earliest convenience to identify the correct procedure to adopt and to fix an intervention date to actually perform the upgrade in collaborative manner.

Release notes

The PostgreSQL official documentation is an exhaustive source of information for all the fixes, changes and new features coming with a new release. Please refer to the official release notes associated with the version you want to upgrade to, to consult the sections and issues of interest for your use case and to check any special recommendation related with upgrading to that version.

New Features and Noticeable Improvements

We might be able to compile a list of features and improvements which are noticeable and likely to be of interest for the DBOD users also based on their results and the feedback received. They will be documented here, and in the sections below, which for the time being are left intentionally blank.

SQL and Applications

- intentionally blank, not yet compiled

Administration

- intentionally blank, not yet compiled

Security & Account Management

- intentionally blank, not yet compiled

Known Issues & Unwanted Collateral Effects

when upgrading to 13 or higher

-

If your instance is running on a CentOS 7 host it will need to be migrated first to a RHEL 8 host but when doing this we will need to take care of a silent GNU C library (glibc) locale change

PostgreSQL uses locale data provided by the operating system’s C library for sorting text. Sorting happens in a variety of contexts, including for user output, merge joins, B-tree indexes, and range partitions. In the latter two cases, sorted data is persisted to disk. If the locale data in the C library changes during the lifetime of a database, the persisted data may become inconsistent with the expected sort order, which could lead to erroneous query results and other incorrect behavior.This issue can result in duplication occurring in PK or UK indexes. In order to prevent any issue a REINDEX of all potentially affected indexes is necessary. It will be run as an additional step at the end of the migration before resuming activity. As a consequence the donwtime to migrate the instance can increase, depending on the # and size of the indexes to rebuild.